Are you looking for an answer to the question “How Do Mappers Work In Sqoop?”? We answer all your questions at the website Chiangmaiplaces.net in the category +100 Marketing Blog Post Topics & Ideas. You will find the answer below.

The number of mappers (four by default, but you can override it) works together with the split-by column: Sqoop builds a set of WHERE clauses so that each mapper reads its own logical “slice” of the source table.
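The slicing idea can be sketched for an integer split-by column. Sqoop first runs a boundary query for the column's MIN and MAX, then divides that range among the mappers. The function below is a simplified illustration of that idea, not Sqoop's actual splitter code; its name and the exact predicate format are assumptions.

```python
def integer_split_where_clauses(column, min_val, max_val, num_mappers=4):
    """Simplified sketch: divide the inclusive range [min_val, max_val]
    into slices and return one WHERE predicate per mapper."""
    if num_mappers < 1:
        raise ValueError("num_mappers must be at least 1")
    span = max_val - min_val
    # Never create more slices than the range can fill (at least one slice).
    num_splits = max(1, min(num_mappers, span))
    bounds = [min_val + (span * i) // num_splits for i in range(num_splits + 1)]
    clauses = []
    for i in range(num_splits):
        lo, hi = bounds[i], bounds[i + 1]
        # Every slice except the last excludes its upper bound so that
        # no row is read twice; the last slice includes max_val.
        op = "<=" if i == num_splits - 1 else "<"
        clauses.append(f"{column} >= {lo} AND {column} {op} {hi}")
    return clauses


for clause in integer_split_where_clauses("id", 1, 100):
    print(clause)
```

With ids from 1 to 100 and the default of 4 mappers, each mapper gets roughly a quarter of the id range. Note that an evenly divided range only yields evenly sized slices if the values are evenly distributed; a skewed split-by column gives some mappers much more work than others.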


What is the use of mappers in Sqoop?

Apache Sqoop uses Hadoop MapReduce to pull data from relational databases and store it on HDFS. When importing data, Sqoop limits the number of mappers accessing the RDBMS so that the parallel connections do not overwhelm the database, which would amount to a self-inflicted denial of service. By default it runs 4 mappers at a time, but this value can be configured.

How do I control mappers in Sqoop?

Controlling Parallelism. Sqoop imports data in parallel from most database sources. You can specify the number of map tasks (parallel processes) used for the import with the -m or --num-mappers argument.
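As a minimal sketch, the helper below assembles a `sqoop import` argument list that could be passed to `subprocess.run`. The flags `--connect`, `--table`, `--target-dir`, `--num-mappers` and `--split-by` are Sqoop import arguments; the helper name, JDBC URL, table and directory are hypothetical placeholders.

```python
import shlex


def build_sqoop_import(connect, table, target_dir, num_mappers=None, split_by=None):
    """Hypothetical helper: build a `sqoop import` argv list.

    When num_mappers is None the flag is omitted, so Sqoop falls back
    to its default of 4 mappers.
    """
    args = ["sqoop", "import",
            "--connect", connect,
            "--table", table,
            "--target-dir", target_dir]
    if num_mappers is not None:
        if num_mappers < 1:
            raise ValueError("num_mappers must be at least 1")
        args += ["--num-mappers", str(num_mappers)]
    if split_by is not None:
        # Column whose value range is divided among the mappers.
        args += ["--split-by", split_by]
    return args


cmd = build_sqoop_import("jdbc:mysql://db.example.com/shop", "orders",
                         "/data/orders", num_mappers=8, split_by="order_id")
print(shlex.join(cmd))  # shell-quoted form, useful for logging
```

Building the command as a list rather than a single string avoids shell-quoting problems when the values contain spaces or special characters.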


What are the default mappers in Sqoop?

When you don't specify the number of mappers while transferring data from an RDBMS to the HDFS file system, Sqoop uses the default of 4 mappers. Sqoop imports data in parallel from most database sources.

How is the number of mappers decided?

It depends on how many cores and how much memory each worker (slave) node has. As a rule of thumb, each mapper should get 1 to 1.5 processor cores, so a node with 15 cores can run 10 mappers. A Hadoop cluster with 100 such data nodes can therefore run 1,000 mappers.
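The rule-of-thumb arithmetic can be written out as a small sketch. It assumes the upper figure of 1.5 cores per mapper and, unlike a real capacity plan, ignores memory; both function names are hypothetical.

```python
def mappers_per_node(cores, cores_per_mapper=1.5):
    """Rule of thumb: how many mappers fit on one node, counting cores only."""
    return int(cores // cores_per_mapper)


def cluster_mappers(nodes, cores_per_node, cores_per_mapper=1.5):
    """Total mappers for a cluster of identical nodes."""
    return nodes * mappers_per_node(cores_per_node, cores_per_mapper)


print(mappers_per_node(15))      # 15 cores / 1.5 cores per mapper
print(cluster_mappers(100, 15))  # 100 nodes with 15 cores each
```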

How do you decide number of mappers in sqoop job?

The optimal number of mappers depends on many variables: you need to take into account your database type, the hardware that is used for your database server, and the impact to other requests that your database needs to serve. There is no optimal number of mappers that works for all scenarios.

How do I set number of mappers in sqoop export?

You can change it by passing either the -m or the --num-mappers argument to the job. Sqoop sets no maximum on the number of mappers, but each mapper opens its own database connection, so the total number of concurrent connections to the database is a factor to consider.

What is the default number of mappers and reducers in Sqoop?

By default, a Sqoop job uses 4 mappers and 0 reducers.


How can I improve my Sqoop export performance?

To optimize performance, set the number of map tasks to a value lower than the maximum number of connections that the database supports. Controlling the amount of parallelism that Sqoop will use to transfer data is the main way to control the load on your database.
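A minimal sketch of that advice: since each map task holds its own database connection, the requested mapper count is capped strictly below the connections still available. The helper and its parameters are hypothetical; in practice you would read the limit from your database's configuration.

```python
def safe_num_mappers(requested, db_max_connections, connections_in_use=0):
    """Hypothetical helper: cap the mapper count strictly below the
    database's spare connection budget (one connection per mapper)."""
    available = db_max_connections - connections_in_use
    if available <= 1:
        raise ValueError("no spare database connections for a parallel transfer")
    # Stay strictly below the limit, but always run at least one mapper.
    return max(1, min(requested, available - 1))


print(safe_num_mappers(16, 10))      # request exceeds the limit, so it is capped
print(safe_num_mappers(4, 100, 80))  # plenty of headroom, request is kept
```

Leaving headroom matters because the database usually has to keep serving other clients while the transfer runs.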

What is incremental load in Sqoop?

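An incremental load can be performed with the Sqoop import command, or by loading the data into Hive without overwriting it. The attributes to specify for an incremental import in Sqoop are:
- Mode (--incremental): defines how Sqoop determines which rows are new (append or lastmodified).
- Column (--check-column): the column Sqoop examines to find new rows.
- Value (--last-value): the maximum value of the check column from the previous import.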
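The selection logic of append mode can be simulated in memory. In Sqoop the filtering happens in the database through a WHERE condition on the check column; the function below is only a hypothetical sketch of that rule, and the `INCREMENTAL_ARGS` list shows how the three attributes would appear on a command line (with "id" and 3 as placeholder values).

```python
# Placeholder values for the three incremental-import attributes.
INCREMENTAL_ARGS = ["--incremental", "append",
                    "--check-column", "id",
                    "--last-value", "3"]


def incremental_append(rows, check_column, last_value):
    """Hypothetical simulation of append mode: keep rows whose check
    column exceeds last_value and return the value to save for the next run."""
    new_rows = [row for row in rows if row[check_column] > last_value]
    new_last = max((row[check_column] for row in new_rows), default=last_value)
    return new_rows, new_last


table = [{"id": i} for i in range(1, 6)]
rows, last = incremental_append(table, "id", 3)
print(rows, last)
```

Saving the returned value and passing it as the next `--last-value` is what lets repeated runs import only the rows added since the previous run.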

What is the default number of mappers and reducers in a MapReduce job?

The number of mappers has no fixed default: it depends on (total data size)/(input split size). For example, with 1 TB of data and an input split size of 100 MB, the job runs (1000*1000)/100 = 10,000 (ten thousand) mappers. If you do not set the number of reducers, Hadoop creates a single reducer (mapreduce.job.reduces defaults to 1).

How many mappers will come into the picture for importing the data coming from table size 128 MB?

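For a Sqoop import, the number of mappers comes from the -m or --num-mappers argument (4 by default), not from the table's size. Block-based counting applies to MapReduce jobs that read files from HDFS: with the default 128 MB split size, a 128 MB file forms 1 split and needs 1 mapper, while 1 GB of data is stored in 8 blocks (1024 / 128 = 8) and needs 8 mappers. 1 TB would need 8,192.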

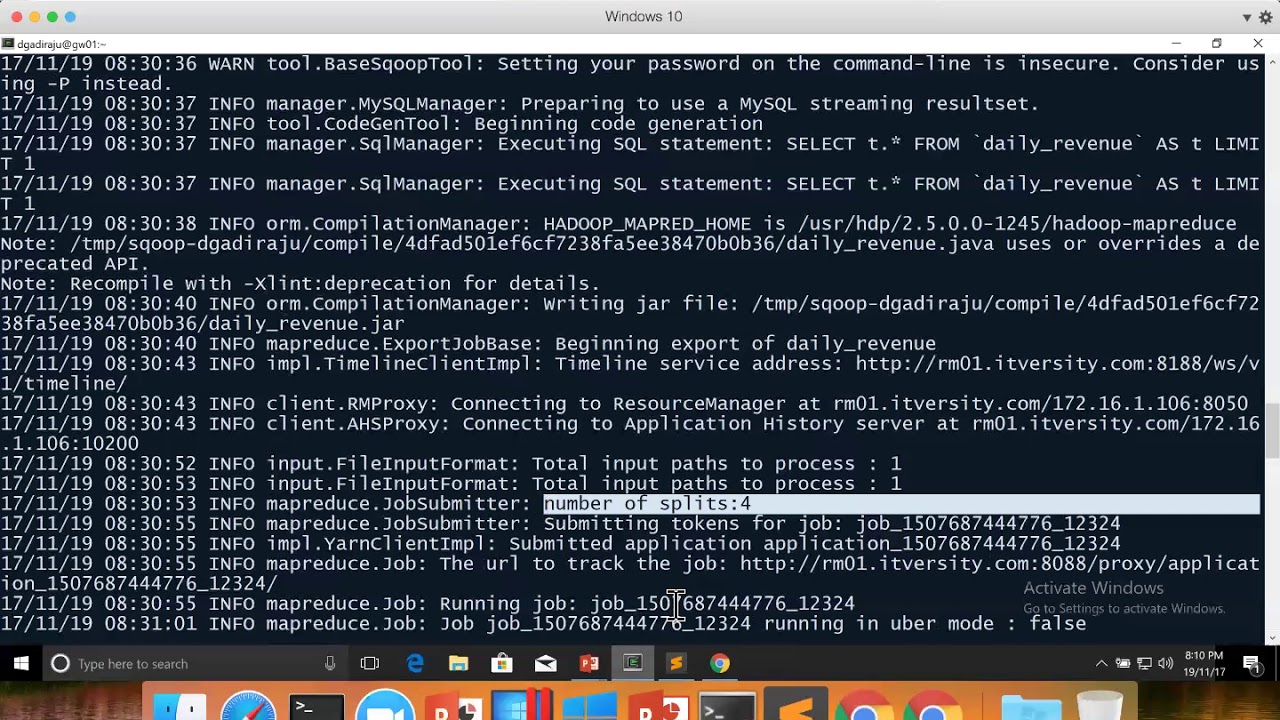

23 Apache Sqoop – Sqoop Export – Column mapping

Images related to the topic23 Apache Sqoop – Sqoop Export – Column mapping

What is the use of HCatalog?

The goal of HCatalog is to allow Pig and MapReduce to use the same data structures as Hive, so there is no need to convert data between them. All three products use Hadoop to store data. Hive stores its metadata (i.e., schema) in a database such as MySQL or Derby.

How many mappers are executed?

As a rule of thumb, each mapper should get 1 to 1.5 processor cores, so a 15-core node can run 10 mappers. The number of mappers can be controlled by changing the block (input split) size.

How does Hadoop calculate number of mappers?

The number of mappers = total data size / input split size defined in the Hadoop configuration, rounded up. In code, the relevant settings can be configured through JobConf variables.
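The formula can be checked with a short sketch that uses ceiling division, because a final partial split still needs its own mapper. It ignores the small tolerance Hadoop's FileInputFormat applies to the last split; the function name is hypothetical and all sizes are in MB.

```python
def num_mappers(total_size, split_size):
    """One mapper per input split, rounding up for a partial last split."""
    if split_size <= 0:
        raise ValueError("split_size must be positive")
    return -(-total_size // split_size)  # ceiling division on integers


print(num_mappers(1000 * 1000, 100))       # 1 TB (decimal) with 100 MB splits
print(num_mappers(10 * 1024 * 1024, 128))  # 10 TB (binary) with 128 MB splits
print(num_mappers(500, 128))               # 500 MB with 128 MB splits
```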

How many mappers will run for a file which is split into 10 blocks?

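Hadoop runs one mapper per input split, so a file split into 10 blocks is processed by 10 mappers (assuming one split per block). The same rule scales up: for a 10 TB file with a 128 MB block (input split) size, the number of mappers will be around 81,920.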

Can reducers be more than mappers?

Yes. If your data size is small, you don't need many mappers processing the input files in parallel. However, if the <key,value> pairs generated by the mappers are large and diverse, it makes sense to have more reducers, because more <key,value> pairs can then be processed in parallel.

Is it possible to change the number of mappers to be created in a MapReduce job?

Yes, the number of mappers in a MapReduce job can be changed. A job may run hundreds or thousands of mappers in parallel across the worker (slave) nodes; how many run on each node depends on that node's machine configuration, and each mapper writes its output to the node's local disk.

What determines the number of mappers for a MapReduce job?

The number of mappers for a MapReduce job is driven by the number of input splits, and input splits depend on the block size. For example, with 500 MB of data and a 128 MB HDFS block size, the job runs approximately 4 mappers (500 / 128 ≈ 3.9, rounded up).
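The link between block size and splits can be sketched after the logic of Hadoop's FileInputFormat: the split size is max(minSize, min(maxSize, blockSize)), and the loop keeps cutting full splits while the remainder is more than 1.1 times the split size, so a slightly oversized tail is not turned into a tiny extra split. This Python port is a simplified illustration (single file, sizes in MB rather than bytes, no block locations).

```python
SPLIT_SLOP = 1.1  # a final remainder up to 10% larger than a split stays in one split


def compute_split_size(block_size, min_size=1, max_size=2**63 - 1):
    """Split size = max(minSize, min(maxSize, blockSize))."""
    return max(min_size, min(max_size, block_size))


def split_file(length, block_size, min_size=1, max_size=2**63 - 1):
    """Return the sizes of the input splits for one file of the given length."""
    split_size = compute_split_size(block_size, min_size, max_size)
    splits = []
    remaining = length
    # Cut full-size splits while the remainder is clearly larger than one split.
    while remaining / split_size > SPLIT_SLOP:
        splits.append(split_size)
        remaining -= split_size
    if remaining != 0:
        splits.append(remaining)  # the last, possibly smaller, split
    return splits


print(split_file(500, 128))  # 500 MB file, 128 MB blocks
print(split_file(140, 128))  # within the 10% slop, so a single split
```

Each returned split corresponds to one mapper, so the 500 MB example gives 4 mappers, matching the estimate above.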

What is fetch size in sqoop?

The --fetch-size argument specifies the number of entries that Sqoop reads from the database at a time.
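Fetching in batches can be illustrated by analogy with Python's built-in `sqlite3` module, where `cursor.fetchmany(size)` returns at most `size` rows per call. This is not how Sqoop itself is implemented; it only shows what "entries read at a time" means. The `orders` table and the helper name are hypothetical.

```python
import sqlite3


def read_in_batches(conn, query, fetch_size):
    """Yield result rows in batches of at most fetch_size rows."""
    cur = conn.execute(query)
    while True:
        batch = cur.fetchmany(fetch_size)
        if not batch:
            break
        yield batch


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (id INTEGER PRIMARY KEY)")
conn.executemany("INSERT INTO orders (id) VALUES (?)", [(i,) for i in range(1, 11)])

batch_sizes = [len(b) for b in read_in_batches(conn, "SELECT id FROM orders ORDER BY id", 4)]
print(batch_sizes)
```

A larger fetch size means fewer round trips to the database but more rows held in memory at once.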

How many mappers and reducers are submitted when Sqoop copies data into HDFS?

Each Sqoop copy into HDFS submits a single MapReduce job that runs 4 map tasks by default (one per mapper), and no reduce tasks are scheduled.

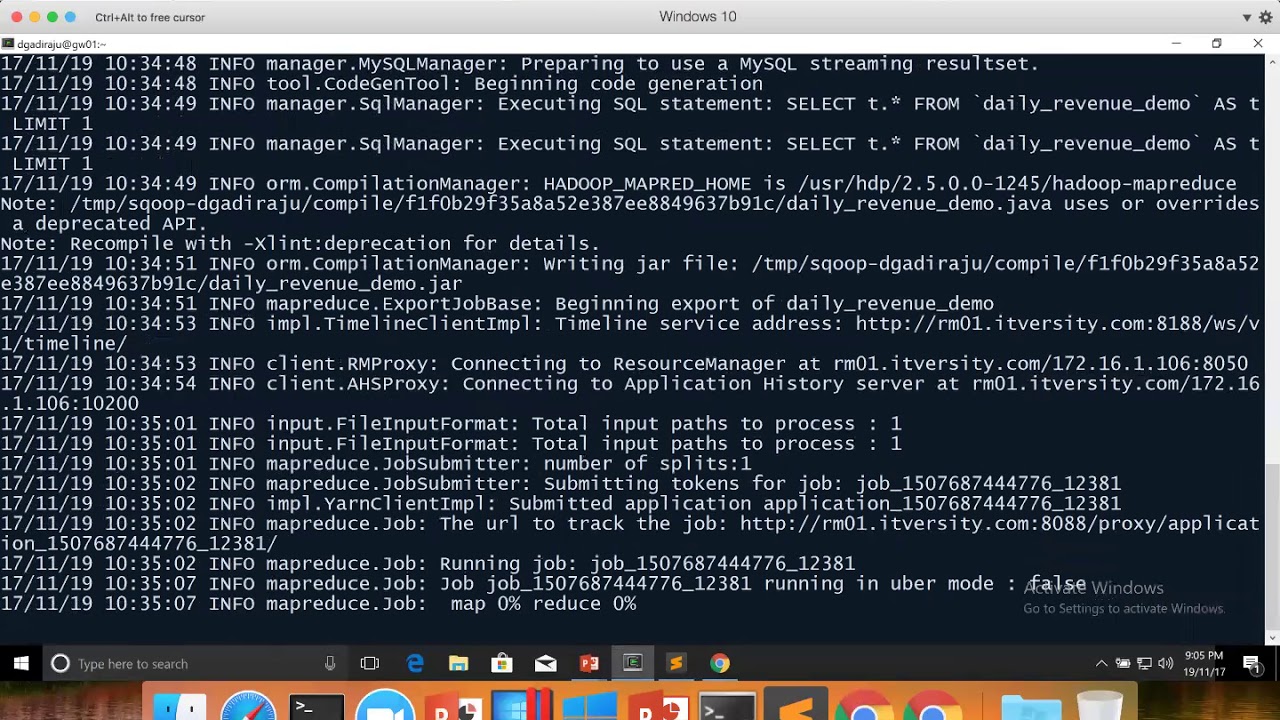

22 Apache Sqoop – Sqoop Export – Export behaviour and number of mappers

Images related to the topic22 Apache Sqoop – Sqoop Export – Export behaviour and number of mappers

What is split size in sqoop?

According to the Sqoop documentation, the --split-limit parameter places an upper limit on the size of each split, and applies only to integer and date split columns. If a split would be larger than the value given, the splits are resized to fit within this limit, and the number of splits (and therefore the actual number of mappers) changes accordingly.
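The effect on the split count can be sketched for an integer column. This is a simplified, hypothetical model of the behavior described in the documentation, not Sqoop's splitter code: if dividing the range among the requested mappers would produce splits wider than the limit, the range is cut into limit-sized pieces instead.

```python
import math


def splits_with_limit(min_val, max_val, num_mappers, split_limit=None):
    """Hypothetical sketch: number of splits for an integer split-by column
    after applying a --split-limit style cap on the width of each split."""
    span = max_val - min_val
    split_size = span // num_mappers
    if split_limit is not None and split_limit > 0 and split_size > split_limit:
        # Splits would be too wide: cut the range into limit-sized pieces.
        return math.ceil(span / split_limit)
    return num_mappers


print(splits_with_limit(0, 1_000_000, 4))                       # no limit
print(splits_with_limit(0, 1_000_000, 4, split_limit=100_000))  # limit forces more splits
```

This is why setting a split limit can raise the effective mapper count above the value passed with -m.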

Why are there no reducers in Sqoop?

Reducers are used for accumulation or aggregation. Sqoop imports and exports only transfer rows between the database and Hadoop, with each mapper handling its own slice of the data in parallel, so there is nothing to aggregate and no reduce phase is needed.
